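It is a good exercise to analyze how Bayes' theorem can be used to estimate the posterior probability of each class in a two-class scenario:

$$P(C_k \mid \mathbf{x}) = \frac{p(\mathbf{x} \mid C_k)\,P(C_k)}{p(\mathbf{x})}, \qquad p(\mathbf{x}) = \sum_{j=1}^{2} p(\mathbf{x} \mid C_j)\,P(C_j)$$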

The decision boundary is the set of points where the two posteriors are equal:

$$P(C_1 \mid \mathbf{x}) = P(C_2 \mid \mathbf{x})$$

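Replacing the class-conditional probabilities with Gaussian densities, where $d$ is the number of features and $\boldsymbol{\mu}_k$, $\boldsymbol{\Sigma}_k$ are the mean vector and covariance matrix of class $C_k$:

$$p(\mathbf{x} \mid C_k) = \frac{1}{(2\pi)^{d/2}\,|\boldsymbol{\Sigma}_k|^{1/2}} \exp\!\left(-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_k)^\top \boldsymbol{\Sigma}_k^{-1} (\mathbf{x}-\boldsymbol{\mu}_k)\right)$$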

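As a minimal sketch, assuming NumPy and made-up two-class parameters (the means, covariances, and priors below are hypothetical), the posteriors can be computed directly from Bayes' theorem; the denominator $p(\mathbf{x})$ is just the sum of the numerators over both classes:

```python
import numpy as np

def gaussian_pdf(x, mu, sigma):
    """Multivariate normal density N(x; mu, sigma) evaluated at a single point x."""
    d = len(mu)
    diff = x - mu
    norm = 1.0 / np.sqrt((2 * np.pi) ** d * np.linalg.det(sigma))
    # solve(sigma, diff) computes sigma^{-1} diff without forming the inverse
    return norm * np.exp(-0.5 * diff @ np.linalg.solve(sigma, diff))

def posteriors(x, mus, sigmas, priors):
    """P(C_k | x) = p(x | C_k) P(C_k) / p(x) for every class k."""
    joint = np.array([gaussian_pdf(x, m, s) * p for m, s, p in zip(mus, sigmas, priors)])
    # p(x) = sum_k p(x | C_k) P(C_k) normalizes the posteriors so they sum to 1
    return joint / joint.sum()

# Hypothetical parameters for two classes in two dimensions
mus = [np.array([0.0, 0.0]), np.array([2.0, 2.0])]
sigmas = [np.eye(2), np.array([[2.0, 0.5], [0.5, 1.0]])]
priors = [0.5, 0.5]

post = posteriors(np.array([1.0, 1.0]), mus, sigmas, priors)
print(post)
```

With equal covariances and equal priors, a point exactly halfway between the two means gets a posterior of 0.5 for each class, which is a quick sanity check on the normalization.
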
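Taking the logarithm of the class posteriors:

$$\log P(C_k \mid \mathbf{x}) = -\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_k)^\top \boldsymbol{\Sigma}_k^{-1}(\mathbf{x}-\boldsymbol{\mu}_k) - \frac{1}{2}\log|\boldsymbol{\Sigma}_k| + \log P(C_k) - \frac{d}{2}\log 2\pi - \log p(\mathbf{x})$$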

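Since $\log p(\mathbf{x})$ and $\frac{d}{2}\log 2\pi$ are independent of the class, they can be dropped, leaving us with the discriminant function:

$$g_k(\mathbf{x}) = -\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu}_k)^\top \boldsymbol{\Sigma}_k^{-1}(\mathbf{x}-\boldsymbol{\mu}_k) - \frac{1}{2}\log|\boldsymbol{\Sigma}_k| + \log P(C_k)$$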

Therefore, the classifier chooses $C_1$ if $g_1(\mathbf{x}) > g_2(\mathbf{x})$ and $C_2$ otherwise, and the decision boundary becomes $g_1(\mathbf{x}) = g_2(\mathbf{x})$.

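A minimal sketch of this classifier, assuming NumPy and hypothetical two-class parameters. Working with $g_k(\mathbf{x})$ instead of the posterior avoids the exponential and the normalizing constant, and `np.linalg.slogdet` computes $\log|\boldsymbol{\Sigma}_k|$ without overflow:

```python
import numpy as np

def discriminant(x, mu, sigma, prior):
    """g_k(x) = -1/2 (x - mu)^T Sigma^{-1} (x - mu) - 1/2 log|Sigma| + log P(C_k).

    The class-independent terms -d/2 log(2 pi) and -log p(x) are omitted."""
    diff = x - mu
    _, logdet = np.linalg.slogdet(sigma)
    return -0.5 * diff @ np.linalg.solve(sigma, diff) - 0.5 * logdet + np.log(prior)

def classify(x, mus, sigmas, priors):
    """Return the index k of the class with the largest g_k(x)."""
    scores = [discriminant(x, m, s, p) for m, s, p in zip(mus, sigmas, priors)]
    return int(np.argmax(scores))

# Hypothetical parameters for two classes in two dimensions
mus = [np.array([0.0, 0.0]), np.array([2.0, 2.0])]
sigmas = [np.eye(2), np.array([[2.0, 0.5], [0.5, 1.0]])]
priors = [0.5, 0.5]

print(classify(np.array([0.1, -0.2]), mus, sigmas, priors))  # 0, i.e. C_1
print(classify(np.array([2.5, 2.0]), mus, sigmas, priors))   # 1, i.e. C_2
```
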
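Expanding the quadratic term (and using the symmetry of $\boldsymbol{\Sigma}_k^{-1}$), the function $g_k(\mathbf{x})$ can be written in the general form:

$$g_k(\mathbf{x}) = \mathbf{x}^\top \mathbf{W}_k \mathbf{x} + \mathbf{w}_k^\top \mathbf{x} + w_{k0}$$

where

$$\mathbf{W}_k = -\frac{1}{2}\boldsymbol{\Sigma}_k^{-1}, \qquad \mathbf{w}_k = \boldsymbol{\Sigma}_k^{-1}\boldsymbol{\mu}_k, \qquad w_{k0} = -\frac{1}{2}\boldsymbol{\mu}_k^\top \boldsymbol{\Sigma}_k^{-1}\boldsymbol{\mu}_k - \frac{1}{2}\log|\boldsymbol{\Sigma}_k| + \log P(C_k)$$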

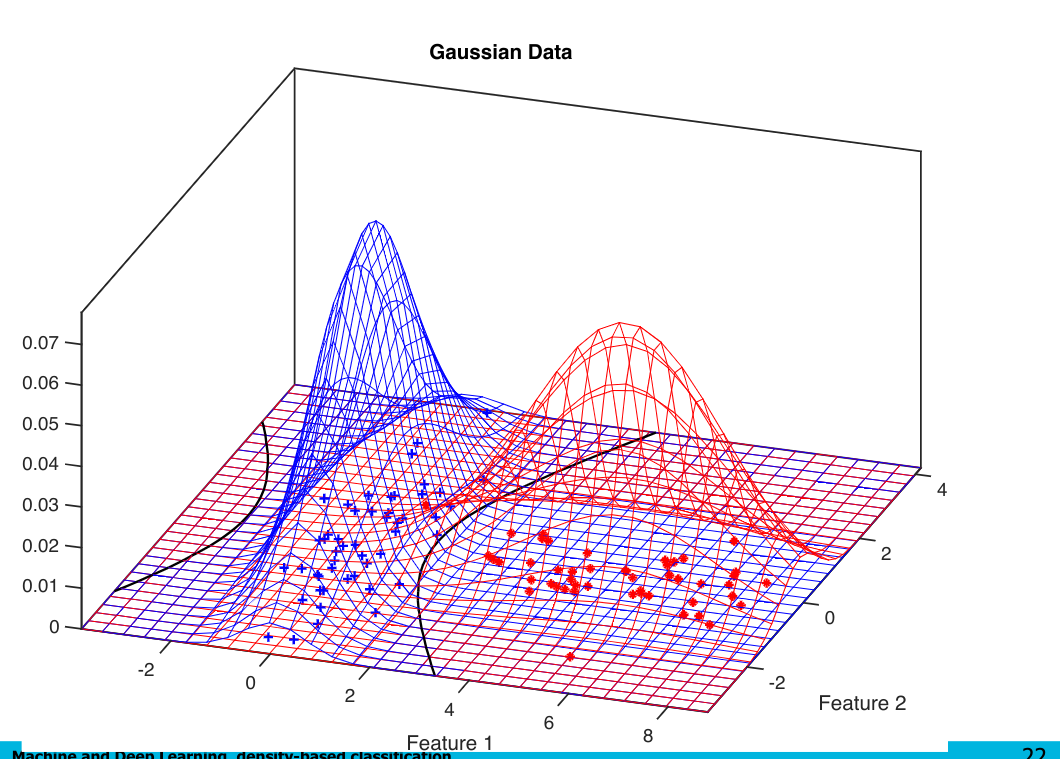

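To check the expansion numerically, here is a small sketch assuming NumPy and a hypothetical class (mean, covariance, and prior chosen only for illustration). The helper names `quadratic_params`, `g_general`, and `g_direct` are made up for this example; the sketch builds $\mathbf{W}_k$, $\mathbf{w}_k$, and $w_{k0}$ and compares the general form with the original expression for $g_k(\mathbf{x})$:

```python
import numpy as np

def quadratic_params(mu, sigma, prior):
    """Return (W_k, w_k, w_k0) so that g_k(x) = x^T W_k x + w_k^T x + w_k0."""
    sigma_inv = np.linalg.inv(sigma)
    _, logdet = np.linalg.slogdet(sigma)  # log|Sigma_k|
    W = -0.5 * sigma_inv
    w = sigma_inv @ mu
    w0 = -0.5 * mu @ sigma_inv @ mu - 0.5 * logdet + np.log(prior)
    return W, w, w0

def g_general(x, W, w, w0):
    """Discriminant in the expanded general form."""
    return x @ W @ x + w @ x + w0

def g_direct(x, mu, sigma, prior):
    """Discriminant written directly in terms of (x - mu)."""
    diff = x - mu
    _, logdet = np.linalg.slogdet(sigma)
    return -0.5 * diff @ np.linalg.inv(sigma) @ diff - 0.5 * logdet + np.log(prior)

# Hypothetical class parameters
mu = np.array([2.0, 2.0])
sigma = np.array([[2.0, 0.5], [0.5, 1.0]])
prior = 0.4

W, w, w0 = quadratic_params(mu, sigma, prior)
x = np.array([0.3, -1.2])
print(g_general(x, W, w, w0), g_direct(x, mu, sigma, prior))  # equal up to rounding
```
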
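This leaves us with a quadratic decision boundary:

$$\mathbf{x}^\top(\mathbf{W}_1 - \mathbf{W}_2)\mathbf{x} + (\mathbf{w}_1 - \mathbf{w}_2)^\top\mathbf{x} + (w_{10} - w_{20}) = 0$$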

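A minimal sketch, assuming NumPy and hypothetical parameters for the two classes, that evaluates the left-hand side of this boundary through a made-up helper `h`. Its sign tells which class wins, and $\mathbf{W}_1 - \mathbf{W}_2$ is nonzero because the two covariance matrices differ:

```python
import numpy as np

def quadratic_params(mu, sigma, prior):
    """Return (W_k, w_k, w_k0) of g_k(x) = x^T W_k x + w_k^T x + w_k0."""
    sigma_inv = np.linalg.inv(sigma)
    _, logdet = np.linalg.slogdet(sigma)
    return (-0.5 * sigma_inv,
            sigma_inv @ mu,
            -0.5 * mu @ sigma_inv @ mu - 0.5 * logdet + np.log(prior))

# Hypothetical parameters: C_1 ~ N(0, I), C_2 with a different covariance
W1, w1, w10 = quadratic_params(np.array([0.0, 0.0]), np.eye(2), 0.5)
W2, w2, w20 = quadratic_params(np.array([2.0, 2.0]), np.array([[2.0, 0.5], [0.5, 1.0]]), 0.5)

def h(x):
    """g_1(x) - g_2(x): positive -> C_1, negative -> C_2, zero on the boundary."""
    return x @ (W1 - W2) @ x + (w1 - w2) @ x + (w10 - w20)

print(W1 - W2)  # nonzero, so the boundary h(x) = 0 is quadratic
print(h(np.array([0.1, -0.2])) > 0, h(np.array([2.5, 2.0])) < 0)  # True True
```
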
What if the covariance matrix is non-invertible?

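If a feature has zero variance within a class, $\boldsymbol{\Sigma}_k$ is singular and we cannot compute $\boldsymbol{\Sigma}_k^{-1}$. Instead, we can estimate a single covariance matrix shared by all classes by averaging the class covariance matrices (here weighted by the class priors). The pooled matrix can be invertible even when an individual $\boldsymbol{\Sigma}_k$ is not:

$$\boldsymbol{\Sigma} = \sum_k P(C_k)\,\boldsymbol{\Sigma}_k$$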

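A minimal sketch of the pooled estimate, assuming NumPy, maximum-likelihood class covariances (`bias=True`), and priors estimated from class frequencies; `pooled_covariance` is a hypothetical helper. The toy data is made up so that the second feature is constant within class 0, which makes that class's covariance singular while the pooled matrix is not:

```python
import numpy as np

# Hypothetical toy data: the second feature is constant (5.0) within class 0
X0 = np.array([[1.0, 5.0], [2.0, 5.0], [3.0, 5.0]])
X1 = np.array([[4.0, 1.0], [5.0, 3.0], [7.0, 2.0], [6.0, 4.0]])

def pooled_covariance(class_data):
    """Prior-weighted average of the per-class covariance matrices."""
    n_total = sum(len(X) for X in class_data)
    n_features = class_data[0].shape[1]
    pooled = np.zeros((n_features, n_features))
    for X in class_data:
        prior = len(X) / n_total                   # estimated P(C_k)
        S_k = np.cov(X, rowvar=False, bias=True)   # ML estimate of Sigma_k
        pooled += prior * S_k
    return pooled

S0 = np.cov(X0, rowvar=False, bias=True)
S = pooled_covariance([X0, X1])
print(np.linalg.matrix_rank(S0), np.linalg.matrix_rank(S))  # 1 2
```

Class 0's own covariance has rank 1 and cannot be inverted, but the pooled matrix has full rank. If a feature were constant in every class, pooling alone would not fix the singularity.
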
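Since a single covariance matrix is shared across all classes, $\mathbf{W}_1 = \mathbf{W}_2 = -\frac{1}{2}\boldsymbol{\Sigma}^{-1}$, so the quadratic term $\mathbf{W}_1 - \mathbf{W}_2$ becomes 0 (the $\log|\boldsymbol{\Sigma}|$ terms cancel as well). The boundary turns into

$$(\mathbf{w}_1 - \mathbf{w}_2)^\top\mathbf{x} + (w_{10} - w_{20}) = 0, \qquad \mathbf{w}_k = \boldsymbol{\Sigma}^{-1}\boldsymbol{\mu}_k,$$

which makes our decision boundary linear.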

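A minimal sketch, assuming NumPy and a hypothetical shared covariance, means, and priors, that checks the linear form against $g_1(\mathbf{x}) - g_2(\mathbf{x})$ computed from the full quadratic discriminants. The helper names are made up for illustration:

```python
import numpy as np

# Hypothetical shared covariance, class means, and priors
sigma = np.array([[1.0, 0.3], [0.3, 0.8]])
sigma_inv = np.linalg.inv(sigma)
mu1, mu2 = np.array([0.0, 0.0]), np.array([2.0, 1.0])
p1, p2 = 0.3, 0.7

def linear_params(mu, prior):
    """w_k = Sigma^{-1} mu_k and w_k0 = -1/2 mu_k^T Sigma^{-1} mu_k + log P(C_k).

    The shared -1/2 log|Sigma| term is left out because it cancels in g_1 - g_2."""
    return sigma_inv @ mu, -0.5 * mu @ sigma_inv @ mu + np.log(prior)

w1, w10 = linear_params(mu1, p1)
w2, w20 = linear_params(mu2, p2)

def boundary(x):
    """Linear form of g_1(x) - g_2(x): positive -> C_1, negative -> C_2."""
    return (w1 - w2) @ x + (w10 - w20)

def full_difference(x):
    """g_1(x) - g_2(x) computed from the full quadratic discriminants."""
    _, logdet = np.linalg.slogdet(sigma)
    def g(mu, prior):
        diff = x - mu
        return -0.5 * diff @ sigma_inv @ diff - 0.5 * logdet + np.log(prior)
    return g(mu1, p1) - g(mu2, p2)

x = np.array([1.5, -0.7])
print(boundary(x), full_difference(x))  # the x^T Sigma^{-1} x terms cancel, so these agree
```
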
No covariance matrix 🥺

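If we cannot even estimate a single covariance matrix, we can simply assume that the features are independent of each other and all have the same variance $\sigma^2$. This results in a covariance matrix of the form:

$$\boldsymbol{\Sigma} = \sigma^2\mathbf{I} = \begin{pmatrix} \sigma^2 & 0 & \cdots & 0 \\ 0 & \sigma^2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \sigma^2 \end{pmatrix}$$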
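
Under this assumption $\boldsymbol{\Sigma}^{-1} = \sigma^{-2}\mathbf{I}$, and dropping the class-independent $\log|\boldsymbol{\Sigma}|$ term gives $g_k(\mathbf{x}) = -\|\mathbf{x}-\boldsymbol{\mu}_k\|^2/(2\sigma^2) + \log P(C_k)$, so with equal priors each point is assigned to the nearest class mean. A minimal NumPy sketch with hypothetical means and a given $\sigma^2$ (estimating $\sigma^2$ is left out here):

```python
import numpy as np

def isotropic_discriminant(x, mus, sigma2, priors):
    """g_k(x) = -||x - mu_k||^2 / (2 sigma^2) + log P(C_k) for every class k.

    With Sigma = sigma^2 I, the log|Sigma| term is the same for all classes
    and is dropped."""
    sq_dist = np.sum((np.asarray(mus) - x) ** 2, axis=1)
    return -sq_dist / (2.0 * sigma2) + np.log(priors)

def classify(x, mus, sigma2, priors):
    """Index of the class with the largest isotropic discriminant."""
    return int(np.argmax(isotropic_discriminant(x, mus, sigma2, priors)))

mus = [[0.0, 0.0], [4.0, 0.0]]  # hypothetical class means
print(classify(np.array([2.5, 0.0]), mus, 1.0, [0.5, 0.5]))  # 1: closer to the second mean
print(classify(np.array([2.5, 0.0]), mus, 1.0, [0.9, 0.1]))  # 0: the larger prior shifts the boundary
```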